Overview

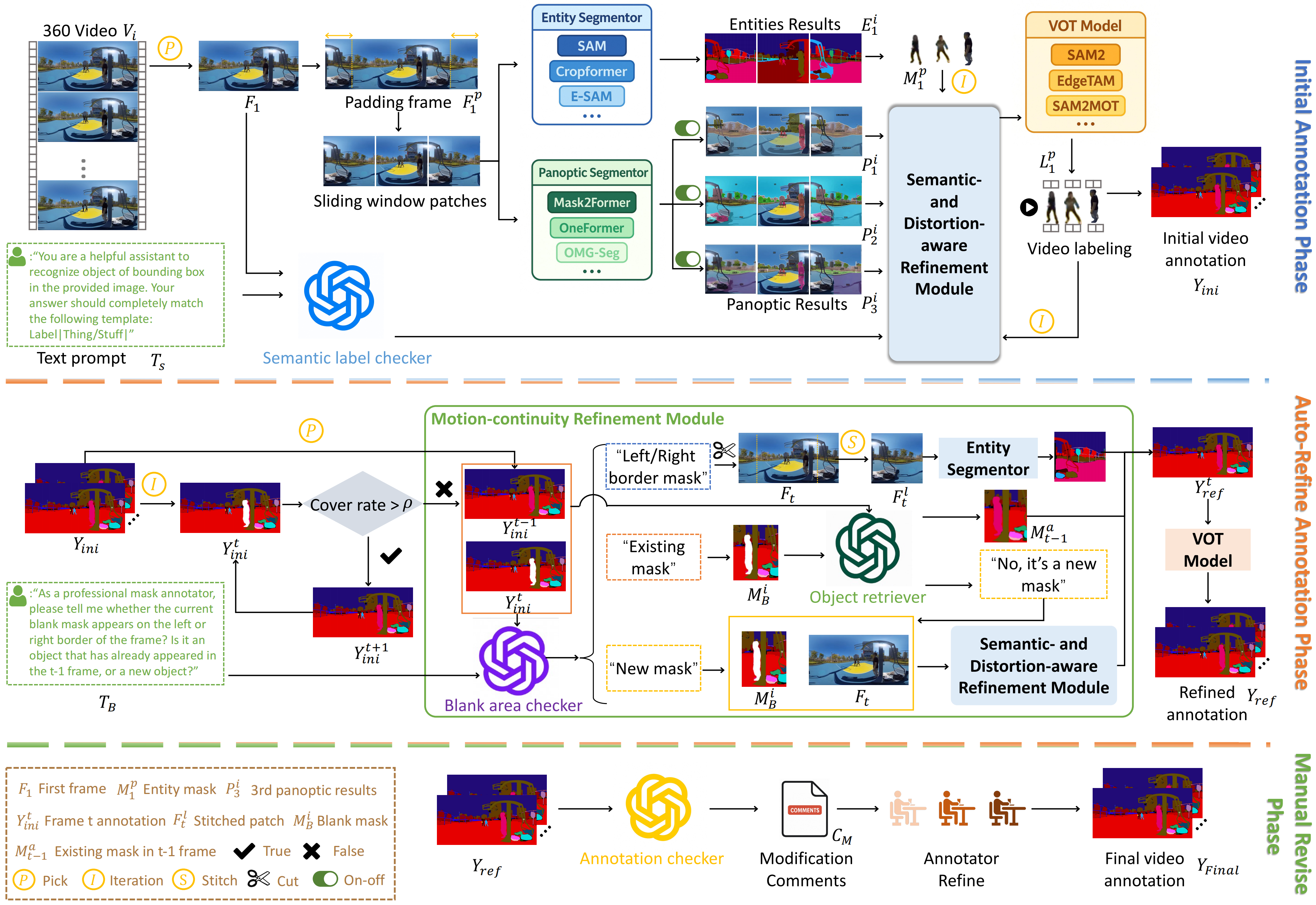

A$^3$360V Pipeline is an automatic labeling pipeline that coordinates pre-trained 2D segmentors and large language models (LLMs) to largely automate the labeling of 360° panoramic videos. The pipeline operates in three stages.

Initial Annotation Phase: We first extract keyframes and use multiple 2D segmentors (e.g., CropFormer, OneFormer) to generate semantic and instance segmentation proposals. These proposals are unified and aligned through LLM-based semantic matching, then refined by a Semantic- and Distortion-aware Refinement (SDR) module that leverages SAM2 to produce high-quality panoramic masks.
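Different segmentors name their classes differently ("Person" vs. "persons"), so their proposals have to be mapped onto one shared vocabulary before they can be merged. The sketch below only illustrates that mapping step. It swaps the LLM for plain string similarity (Python's `difflib`), and `TARGET_VOCAB`, `match_label`, `unify`, and the `0.6` cutoff are hypothetical; the pipeline's actual label set and LLM prompt are described in the paper.

```python
import difflib
from typing import Optional

# Hypothetical shared vocabulary; the real pipeline defines its own label set.
TARGET_VOCAB = ["person", "car", "building", "tree", "sky", "road"]


def match_label(raw_label: str, vocab: list[str], cutoff: float = 0.6) -> Optional[str]:
    """Map one segmentor-specific class name onto the shared vocabulary.

    Stand-in for LLM-based semantic matching: uses string similarity
    from difflib instead of querying an LLM. Returns None if nothing
    in the vocabulary is similar enough.
    """
    # Normalize casing and separators that differ between label sets.
    normalized = raw_label.lower().replace("_", " ").replace("-", " ").strip()
    if normalized in vocab:
        return normalized
    candidates = difflib.get_close_matches(normalized, vocab, n=1, cutoff=cutoff)
    return candidates[0] if candidates else None


def unify(proposals: dict[str, list[str]], vocab: list[str]) -> dict[str, dict[str, Optional[str]]]:
    """Build a per-segmentor table from raw class names to shared labels."""
    return {
        segmentor: {label: match_label(label, vocab) for label in labels}
        for segmentor, labels in proposals.items()
    }


if __name__ == "__main__":
    mapping = unify(
        {"OneFormer": ["Person", "car", "sky"], "CropFormer": ["persons", "trees", "skyscraper"]},
        TARGET_VOCAB,
    )
    print(mapping)
```

String similarity correctly leaves "skyscraper" unmatched rather than forcing it onto "sky", but it cannot capture synonyms such as "automobile" and "car"; that kind of semantic judgment is what an LLM-based matcher adds.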

Auto-Refine Annotation Phase: For subsequent keyframes in the video, we iteratively propagate annotations and identify low-quality regions based on mask coverage. Frames that fall below the coverage threshold are reprocessed by a GPT-guided Motion-Continuity Refinement (MCR) module, which resolves annotation inconsistencies between the left and right edges of the equirectangular projection (ERP) and recovers masks missing due to occlusion or distortion.
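The coverage test that decides which frames go to refinement can be sketched as follows. This is a minimal illustration, assuming coverage means the fraction of pixels carrying a non-background label and that `0` marks unlabeled pixels. The optional `spherical` weighting is an added assumption: in ERP, rows near the poles cover less of the sphere, so each row is weighted by the cosine of its latitude. The names `mask_coverage`, `flag_low_coverage`, and the `0.9` threshold are hypothetical, not values from the paper.

```python
import numpy as np


def mask_coverage(label_map: np.ndarray, ignore_id: int = 0, spherical: bool = False) -> float:
    """Fraction of an ERP frame covered by labeled (non-ignore) pixels."""
    labeled = (label_map != ignore_id).astype(np.float64)
    if not spherical:
        return float(labeled.mean())
    h = label_map.shape[0]
    # Latitude at each row centre: close to +pi/2 at the top, -pi/2 at the bottom.
    lat = (0.5 - (np.arange(h) + 0.5) / h) * np.pi
    # Rows near the poles span less solid angle, so weight them by cos(latitude).
    weights = np.broadcast_to(np.cos(lat)[:, None], labeled.shape)
    return float((labeled * weights).sum() / weights.sum())


def flag_low_coverage(frames: list[np.ndarray], threshold: float = 0.9, spherical: bool = True) -> list[int]:
    """Indices of frames whose coverage falls below the (hypothetical) threshold."""
    return [i for i, f in enumerate(frames) if mask_coverage(f, spherical=spherical) < threshold]


if __name__ == "__main__":
    full = np.ones((4, 8), dtype=int)
    half = full.copy()
    half[:, :4] = 0  # left half unlabeled
    print(flag_low_coverage([full, half]))
```

Frames returned by `flag_low_coverage` would be the candidates handed to the refinement step; frames above the threshold keep their propagated masks.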

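An ERP frame wraps around horizontally, so an object straddling the 0°/360° seam appears as two pieces at the left and right image edges, often with two different instance IDs. The sketch below shows only a simple geometric fix for that specific inconsistency and is not the GPT-guided MCR module: wherever a labeled pixel in the first column sits next to a differently labeled pixel in the last column of the same row, the two IDs are merged with union-find. The function name and the choice to keep the smaller ID are hypothetical; a practical version would also check that both pieces share the same semantic class.

```python
import numpy as np


def merge_seam_instances(inst: np.ndarray, bg: int = 0) -> np.ndarray:
    """Unify instance IDs that are split across the left/right ERP seam."""
    parent: dict[int, int] = {}

    def find(x: int) -> int:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a: int, b: int) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            # Keep the smaller ID as the canonical label.
            parent[max(ra, rb)] = min(ra, rb)

    # The first and last columns are neighbours on the sphere.
    for a, b in zip(inst[:, 0].tolist(), inst[:, -1].tolist()):
        if a != bg and b != bg and a != b:
            union(a, b)

    out = inst.copy()
    for idx in list(parent):
        out[inst == idx] = find(idx)  # relabel every pixel of a merged ID
    return out


if __name__ == "__main__":
    frame = np.array([[3, 0, 0, 7],
                      [3, 0, 0, 7],
                      [0, 0, 0, 0]])
    print(merge_seam_instances(frame))
```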
Manual Revise Phase: Finally, human annotators validate and correct the outputs from the previous stages. Multi-annotator review ensures consistency and completeness across frames, producing the final high-quality annotations.
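One way to make multi-annotator review concrete is to compare two annotators' label maps class by class and surface the classes where they disagree. The sketch below assumes both annotators produce semantic label maps over the same ID space, with `0` as background, and uses intersection-over-union (IoU) as the agreement score. The names `mask_iou` and `disagreements` and the `0.8` threshold are hypothetical; the paper's actual review protocol may differ.

```python
import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection-over-union of two binary masks."""
    a, b = a.astype(bool), b.astype(bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as full agreement
    return float(np.logical_and(a, b).sum() / union)


def disagreements(ann_a: np.ndarray, ann_b: np.ndarray, threshold: float = 0.8, bg: int = 0) -> list[int]:
    """Class IDs whose per-class IoU between two annotators is below threshold."""
    ids = (set(np.unique(ann_a).tolist()) | set(np.unique(ann_b).tolist())) - {bg}
    return sorted(i for i in ids if mask_iou(ann_a == i, ann_b == i) < threshold)


if __name__ == "__main__":
    a = np.array([[1, 1, 0, 2]])
    b = np.array([[1, 1, 0, 0]])  # annotator B missed class 2
    print(disagreements(a, b))
```

Classes returned here would be the natural places for a third annotator to adjudicate.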

For further details of the pipeline, please refer to the paper. We hope this work will be helpful to future researchers.